\[ \newcommand{\nRV}[2]{{#1}_1, {#1}_2, \ldots, {#1}_{#2}} \newcommand{\pnRV}[3]{{#1}_1^{#3}, {#1}_2^{#3}, \ldots, {#1}_{#2}^{#3}} \newcommand{\onRV}[2]{{#1}_{(1)} \le {#1}_{(2)} \le \ldots \le {#1}_{(#2)}} \newcommand{\RR}{\mathbb{R}} \newcommand{\Prob}[1]{\mathbb{P}\left({#1}\right)} \newcommand{\PP}{\mathcal{P}} \newcommand{\iidd}{\overset{\mathsf{iid}}{\sim}} \newcommand{\X}{\times} \newcommand{\EE}[1]{\mathbb{E}\left[{#1}\right]} \newcommand{\Var}[1]{\mathsf{Var}\left({#1}\right)} \newcommand{\Ber}[1]{\mathsf{Ber}\left({#1}\right)} \newcommand{\Geom}[1]{\mathsf{Geom}\left({#1}\right)} \newcommand{\Bin}[1]{\mathsf{Bin}\left({#1}\right)} \newcommand{\Poi}[1]{\mathsf{Pois}\left({#1}\right)} \newcommand{\Exp}[1]{\mathsf{Exp}\left({#1}\right)} \newcommand{\SD}[1]{\mathsf{SD}\left({#1}\right)} \newcommand{\sgn}[1]{\mathsf{sgn}} \newcommand{\dd}[1]{\operatorname{d}\!{#1}} \]

2.1 Recalling Distributions, Expectation, and Variance

In this section, we recall some of the most important distributions, together with their key properties, expectations, and variances.

2.1.1 Discrete Distributions

A random variable \(X\) is discrete when it takes values in a countable set. It is characterized by its probability mass function \(p(x)\), where \(\Prob{X = x} = p(x)\) for every \(x \in \textsf{Range}(X)\). The expectation of \(X\) is defined as \[ \EE{X} = \sum_{x \in \textsf{Range}(X)} x p(x) \] provided that \(\sum_{x \in \textsf{Range}(X)} |x| p(x)\) is finite, and the variance is defined as \[ \Var{X} = \EE{(X - \EE{X})^2} = \EE{X^2} - \EE{X}^2 \]
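The sketch below illustrates these two definitions for a discrete random variable with finite range. It assumes the pmf is given as a Python dictionary mapping each value \(x\) to \(p(x)\). The helper names `expectation` and `variance` are hypothetical, and exact rational arithmetic via `fractions.Fraction` is used to avoid rounding.

```python
from fractions import Fraction


def expectation(pmf):
    """E[X] = sum of x * p(x) over the range, for a pmf given as {x: p(x)}."""
    return sum(x * p for x, p in pmf.items())


def variance(pmf):
    """Var(X) = E[X^2] - E[X]^2, the shortcut formula from the text."""
    mean = expectation(pmf)
    second_moment = sum(x * x * p for x, p in pmf.items())
    return second_moment - mean ** 2


# Example pmf on {0, 1, 2} with probabilities 1/4, 1/2, 1/4.
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
print(expectation(pmf))  # 1
print(variance(pmf))     # 1/2
```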

Here are some of the most important discrete distributions you need to know.

Discrete Uniform Distribution

Let \(X\) be a random variable that takes values \(1, 2, \ldots, n\) with equal probability. Then \(X\) is said to have a discrete uniform distribution. We write \(X \sim \mathsf{Unif}(\{1, 2, \ldots, n\})\).

The probability mass function of \(X\) is given by

\[\begin{align*} \Prob{X = x} & = \begin{cases} \frac{1}{n} & \textsf{if } x \in \{1, 2, \ldots, n\} \\ 0 & \textsf{otherwise} \end{cases} \\ & = \frac{1}{n} \mathbb{1}_{\{1, 2, \ldots, n\}}(x) \end{align*}\]

Its expectation and variance are given by \[ \EE{X} = \frac{n+1}{2} \quad \textsf{and} \quad \Var{X} = \frac{n^2 - 1}{12} \]
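You can check the closed forms \(\frac{n+1}{2}\) and \(\frac{n^2-1}{12}\) exactly for small \(n\) by summing directly over the pmf. The function name `uniform_moments` is a hypothetical helper introduced only for this sketch.

```python
from fractions import Fraction


def uniform_moments(n):
    """Exact mean and variance of Unif({1, ..., n}), computed from the pmf 1/n."""
    p = Fraction(1, n)
    mean = sum(x * p for x in range(1, n + 1))
    second_moment = sum(x * x * p for x in range(1, n + 1))
    return mean, second_moment - mean ** 2


# Compare with the closed forms (n + 1) / 2 and (n^2 - 1) / 12.
for n in range(1, 11):
    mean, var = uniform_moments(n)
    assert mean == Fraction(n + 1, 2)
    assert var == Fraction(n * n - 1, 12)

print(uniform_moments(6))  # (Fraction(7, 2), Fraction(35, 12))
```

For a fair six-sided die (\(n = 6\)), this gives \(\EE{X} = 7/2\) and \(\Var{X} = 35/12\).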

Bernoulli Distribution

Let \(X\) be a random variable that takes value \(1\) with probability \(p\) and \(0\) with probability \(1-p\). Then \(X\) is said to have a Bernoulli distribution with parameter \(p\). We write \(X \sim \Ber{p}\) or \(X \sim \mathsf{Bernoulli}(p)\).

The probability mass function of \(X\) is given by

\[\begin{align*} \Prob{X = x} & = \begin{cases} p & \textsf{if } x = 1 \\ 1-p & \textsf{if } x = 0 \\ 0 & \textsf{otherwise} \end{cases} \\ & = p^x (1-p)^{1-x} \quad \textsf{for } x \in \{0, 1\} \end{align*}\]

Its expectation and variance are given by \[ \EE{X} = p \quad \textsf{and} \quad \Var{X} = p(1-p) \]
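This minimal sketch checks that the compact form \(p^x (1-p)^{1-x}\) reproduces the case-by-case pmf on \(\{0, 1\}\). It also confirms \(\EE{X} = p\) and \(\Var{X} = p(1-p)\) exactly for the illustrative value \(p = 3/10\). The function `bernoulli_pmf` is hypothetical.

```python
from fractions import Fraction


def bernoulli_pmf(x, p):
    """Bernoulli pmf: the compact form p^x (1-p)^(1-x) on {0, 1}, and 0 elsewhere."""
    if x not in (0, 1):
        return Fraction(0)
    return p ** x * (1 - p) ** (1 - x)


p = Fraction(3, 10)
pmf = {x: bernoulli_pmf(x, p) for x in (0, 1)}
mean = sum(x * q for x, q in pmf.items())
var = sum(x * x * q for x, q in pmf.items()) - mean ** 2
print(pmf[1], pmf[0], mean, var)  # 3/10 7/10 3/10 21/100
```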

Binomial Distribution

Let \(X\) be a random variable that takes values \(0, 1, \ldots, n\) with probability mass function given by \[\begin{align*} \Prob{X = k} & = \binom{n}{k} p^k (1-p)^{n-k} \end{align*}\] where \(p \in [0, 1]\) and \(n \in \mathbb{N}\). Then \(X\) is said to have a binomial distribution with parameters \(n\) and \(p\). We write \(X \sim \Bin{n, p}\) or \(X \sim \mathsf{Binomial}(n, p)\).

Its expectation and variance are given by \[ \EE{X} = np \quad \textsf{and} \quad \Var{X} = np(1-p) \]
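This sketch builds the binomial pmf with `math.comb` and checks three things exactly: the probabilities sum to one, the mean equals \(np\), and the variance equals \(np(1-p)\). It uses the illustrative parameters \(n = 10\) and \(p = 1/4\). The helper `binomial_pmf` is hypothetical.

```python
from fractions import Fraction
from math import comb


def binomial_pmf(k, n, p):
    """P(X = k) = C(n, k) p^k (1 - p)^(n - k) for k = 0, 1, ..., n."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


n, p = 10, Fraction(1, 4)
pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]
total = sum(pmf)
mean = sum(k * q for k, q in enumerate(pmf))
var = sum(k * k * q for k, q in enumerate(pmf)) - mean ** 2
print(total, mean, var)  # 1 5/2 15/8
```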

Geometric Distribution

Let \(X\) be a random variable that takes values \(1, 2, \ldots\) with probability mass function given by \[\begin{align*} \Prob{X = k} & = (1-p)^{k-1} p \end{align*}\] where \(p \in (0, 1]\). Then \(X\) is said to have a geometric distribution with parameter \(p\). We write \(X \sim \Geom{p}\) or \(X \sim \mathsf{Geometric}(p)\). With this convention, \(X\) counts the number of independent \(\Ber{p}\) trials up to and including the first success.

Its expectation and variance are given by \[ \EE{X} = \frac{1}{p} \quad \textsf{and} \quad \Var{X} = \frac{1-p}{p^2} \]
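The support is infinite here, so this sketch truncates the series after a fixed number of terms. It assumes the neglected tail is negligible, which holds when \((1-p)^{\text{terms}}\) is tiny. It uses the convention above, with support starting at \(1\). Successive probabilities are obtained with the ratio \(\Prob{X = k+1} / \Prob{X = k} = 1 - p\). The function name and the default `terms` are hypothetical choices.

```python
def geometric_moments(p, terms=10_000):
    """Approximate total mass, E[X] and Var(X) for Geom(p) on {1, 2, ...} by truncating the series."""
    mean = second_moment = total = 0.0
    q = p  # P(X = 1) = p
    for k in range(1, terms + 1):
        total += q
        mean += k * q
        second_moment += k * k * q
        q *= 1 - p  # P(X = k + 1) = (1 - p) * P(X = k)
    return total, mean, second_moment - mean ** 2


total, mean, var = geometric_moments(0.2)
print(round(total, 6), round(mean, 6), round(var, 6))  # 1.0 5.0 20.0
```

For \(p = 0.2\), the formulas give \(1/p = 5\) and \((1-p)/p^2 = 20\).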

Poisson Distribution

Let \(X\) be a random variable that takes values \(0, 1, 2, \ldots\) with probability mass function given by \[\begin{align*} \Prob{X = k} & = \frac{\lambda^k}{k!} e^{-\lambda} \end{align*}\] where \(\lambda > 0\). Then \(X\) is said to have a Poisson distribution with parameter \(\lambda\). We write \(X \sim \Poi{\lambda}\) or \(X \sim \mathsf{Poisson}(\lambda)\).

Its expectation and variance are given by \[ \EE{X} = \lambda \quad \textsf{and} \quad \Var{X} = \lambda \]
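A similar truncated-series sketch works for the Poisson distribution. It assumes \(200\) terms suffice for a moderate \(\lambda\) such as the illustrative \(\lambda = 4.5\). The pmf is updated with the ratio \(\Prob{X = k+1} / \Prob{X = k} = \lambda / (k+1)\), which avoids forming \(\lambda^k\) and \(k!\) separately. The function name `poisson_moments` is hypothetical.

```python
import math


def poisson_moments(lam, terms=200):
    """Approximate total mass, E[X] and Var(X) for Pois(lam) by summing the pmf for k < terms."""
    mean = second_moment = total = 0.0
    q = math.exp(-lam)  # P(X = 0) = e^{-lam}
    for k in range(terms):
        total += q
        mean += k * q
        second_moment += k * k * q
        q *= lam / (k + 1)  # P(X = k + 1) = P(X = k) * lam / (k + 1)
    return total, mean, second_moment - mean ** 2


total, mean, var = poisson_moments(4.5)
print(round(total, 6), round(mean, 6), round(var, 6))  # 1.0 4.5 4.5
```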

2.1.2 Continuous Distributions

A random variable \(X\) is continuous if there exists a non-negative function \(f\) such that \[ \Prob{a \leq X \leq b} = \int_a^b f(x) \, \dd{x} \] for all \(a, b \in \RR\) with \(a < b\). The function \(f\) is called the probability density function of \(X\). The expectation of \(X\) is given by \[ \EE{X} = \int_{\textsf{Range}(X)} x f(x) \, \dd{x} \] provided that \(\int_{\textsf{Range}(X)} |x| f(x) \, \dd{x}\) is finite. The variance of \(X\) is defined as \[ \Var{X} = \EE{(X - \EE{X})^2} = \EE{X^2} - \EE{X}^2 \]
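The integrals in these definitions can be approximated numerically. This sketch uses the composite midpoint rule and assumes the density vanishes outside a finite interval \([a, b]\). The function `moments_from_density` and the example density \(f(x) = 2x\) on \([0, 1]\) are illustrative choices, not part of the text. For that density, \(\EE{X} = 2/3\) and \(\Var{X} = 1/2 - 4/9 = 1/18\).

```python
def moments_from_density(f, a, b, n=100_000):
    """Approximate total mass, E[X] and Var(X) for a density f supported on [a, b]
    using the composite midpoint rule with n subintervals."""
    h = (b - a) / n
    mass = first = second = 0.0
    for i in range(n):
        x = a + (i + 0.5) * h  # midpoint of the i-th subinterval
        w = f(x) * h           # approximate probability of that subinterval
        mass += w
        first += x * w
        second += x * x * w
    return mass, first, second - first ** 2


mass, mean, var = moments_from_density(lambda x: 2 * x, 0.0, 1.0)
print(round(mass, 6), round(mean, 6), round(var, 6))  # 1.0 0.666667 0.055556
```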

Below are some continuous distributions you should be familiar with.

Uniform Distribution

Let \(X\) be a random variable that takes values in the interval \([a, b]\), where \(a, b \in \RR\) and \(a < b\), with probability density function given by \[\begin{align*} f(x) & = \begin{cases} \frac{1}{b-a} & \textsf{if } x \in [a, b] \\ 0 & \textsf{otherwise} \end{cases} \end{align*}\] Then \(X\) is said to have a uniform distribution on \([a, b]\). We write \(X \sim \mathsf{Unif}[a, b]\).

Its expectation and variance are given by \[ \EE{X} = \frac{a+b}{2} \quad \textsf{and} \quad \Var{X} = \frac{(b-a)^2}{12} \]

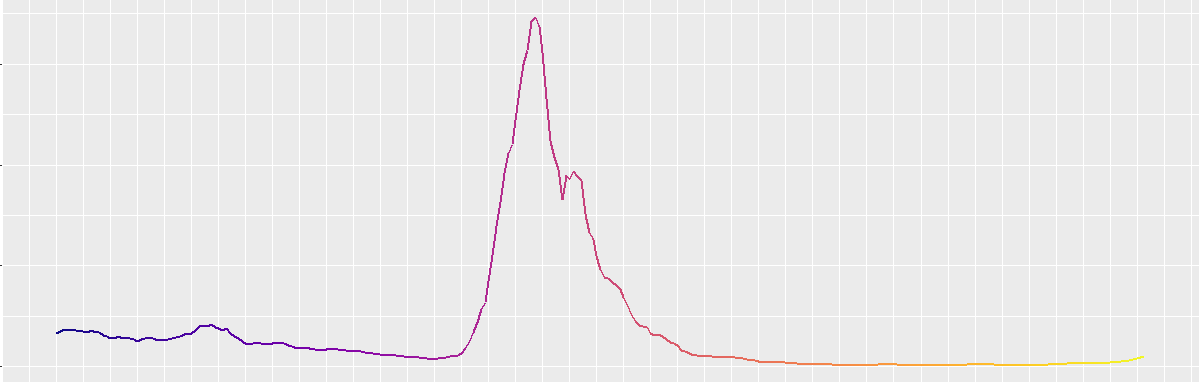
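As a sanity check of \(\frac{a+b}{2}\) and \(\frac{(b-a)^2}{12}\), this Monte Carlo sketch draws samples with Python's `random.Random.uniform` and compares the sample mean and variance with the formulas. The interval \([2, 5]\), the seed, and the sample size are arbitrary illustrative choices. The estimates are random, so they only agree with the exact values up to sampling error.

```python
import random
import statistics


def uniform_sample_moments(a, b, n=100_000, seed=0):
    """Monte Carlo estimates of E[X] and Var(X) for X ~ Unif[a, b]."""
    rng = random.Random(seed)  # seeded generator for reproducibility
    xs = [rng.uniform(a, b) for _ in range(n)]
    return statistics.fmean(xs), statistics.pvariance(xs)


a, b = 2.0, 5.0
mean, var = uniform_sample_moments(a, b)
print(mean, (a + b) / 2)       # sample mean, then the exact value 3.5
print(var, (b - a) ** 2 / 12)  # sample variance, then the exact value 0.75
```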

Exponential Distribution

Let \(X\) be a random variable that takes values in \(\RR_{\geq 0}\) with probability density function given by \[\begin{align*} f(x) & = \begin{cases} \lambda e^{-\lambda x} & \textsf{if } x \geq 0 \\ 0 & \textsf{otherwise} \end{cases} \end{align*}\] where \(\lambda > 0\). Then \(X\) is said to have an exponential distribution with rate parameter \(\lambda\). We write \(X \sim \Exp{\lambda}\) or \(X \sim \mathsf{Exponential}(\lambda)\).

Its expectation and variance are given by \[ \EE{X} = \frac{1}{\lambda} \quad \textsf{and} \quad \Var{X} = \frac{1}{\lambda^2} \]
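Integrating the density gives the CDF \(F(x) = 1 - e^{-\lambda x}\) for \(x \geq 0\). Inverting it yields the inverse-transform sampler \(X = -\ln(1 - U)/\lambda\) with \(U \sim \mathsf{Unif}[0, 1)\). This sketch uses that sampler to check \(1/\lambda\) and \(1/\lambda^2\) empirically. The function `sample_exponential`, the seed, and \(\lambda = 2\) are illustrative assumptions. Python's standard library also offers `random.expovariate` for the same purpose.

```python
import math
import random
import statistics


def sample_exponential(lam, rng):
    """Inverse-transform sampling: if U ~ Unif[0, 1), then -ln(1 - U) / lam ~ Exp(lam)."""
    u = rng.random()  # in [0, 1), so 1 - u is in (0, 1] and the log is finite
    return -math.log(1.0 - u) / lam


rng = random.Random(42)
lam = 2.0
xs = [sample_exponential(lam, rng) for _ in range(100_000)]
print(statistics.fmean(xs), 1 / lam)           # sample mean, then the exact value 0.5
print(statistics.pvariance(xs), 1 / lam ** 2)  # sample variance, then the exact value 0.25
```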

Normal Distribution

Let \(X\) be a random variable that takes values in \(\RR\) with probability density function given by \[\begin{align*} f(x) & = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}} \end{align*}\] where \(\mu \in \RR\) and \(\sigma > 0\). Then \(X\) is said to have a normal distribution with parameters \(\mu\) and \(\sigma^2\). We write \(X \sim \mathsf{Normal}(\mu, \sigma^2)\) or \(X \sim N(\mu, \sigma^2)\).

Its expectation and variance are given by \[ \EE{X} = \mu \quad \textsf{and} \quad \Var{X} = \sigma^2 \]
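This sketch does two things. First, it compares the density formula above with the standard library's `statistics.NormalDist`, which takes the mean and the standard deviation \(\sigma\), not the variance. Second, it recovers \(\mu\) and \(\sigma^2\) with a midpoint rule over \(\mu \pm 12\sigma\), assuming the tail mass outside that range is negligible. The parameters \(\mu = 1\) and \(\sigma = 2\) are illustrative, and `normal_pdf` is a hypothetical helper.

```python
import math
from statistics import NormalDist


def normal_pdf(x, mu, sigma):
    """Density from the text: exp(-(x - mu)^2 / (2 sigma^2)) / sqrt(2 pi sigma^2)."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / math.sqrt(2 * math.pi * sigma ** 2)


mu, sigma = 1.0, 2.0
reference = NormalDist(mu, sigma)  # stdlib normal with mean mu and standard deviation sigma

# The hand-written density agrees with the standard library's at several points.
for x in (-3.0, 0.0, 1.0, 2.5, 7.0):
    assert math.isclose(normal_pdf(x, mu, sigma), reference.pdf(x), rel_tol=1e-12)

# Midpoint-rule moments over [mu - 12 sigma, mu + 12 sigma].
a, b, n = mu - 12 * sigma, mu + 12 * sigma, 100_000
h = (b - a) / n
mids = [a + (i + 0.5) * h for i in range(n)]
mean = sum(x * normal_pdf(x, mu, sigma) * h for x in mids)
second = sum(x * x * normal_pdf(x, mu, sigma) * h for x in mids)
print(round(mean, 6), round(second - mean ** 2, 6))  # 1.0 4.0
```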

Gamma Distribution

Let \(X\) be a random variable that takes values in \(\RR_{\geq 0}\) with probability density function given by \[\begin{align*} f(x) & = \begin{cases} \frac{\lambda^\alpha}{\Gamma(\alpha)} x^{\alpha-1} e^{-\lambda x} & \textsf{if } x \geq 0 \\ 0 & \textsf{otherwise} \end{cases} \end{align*}\] where \(\alpha, \lambda > 0\) and \(\Gamma(\alpha) = \int_0^\infty x^{\alpha-1} e^{-x} \, \dd{x}\) is the gamma function. Then \(X\) is said to have a gamma distribution with shape parameter \(\alpha\) and rate parameter \(\lambda\). We write \(X \sim \mathsf{Gamma}(\alpha, \lambda)\) or \(X \sim \Gamma(\alpha, \lambda)\).

Its expectation and variance are given by \[ \EE{X} = \frac{\alpha}{\lambda} \quad \textsf{and} \quad \Var{X} = \frac{\alpha}{\lambda^2} \]
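This midpoint-rule sketch checks the gamma density numerically, using `math.gamma` for \(\Gamma(\alpha)\). It confirms that the density integrates to one and that its moments match \(\alpha/\lambda\) and \(\alpha/\lambda^2\). The values \(\alpha = 3\) and \(\lambda = 2\) are illustrative, so the expected mean is \(1.5\) and the expected variance is \(0.75\). Cutting the integral off at \(x = 40\) is an assumption that holds for these parameters. The helper `gamma_pdf` is hypothetical.

```python
import math


def gamma_pdf(x, alpha, lam):
    """Gamma(alpha, lam) density with shape alpha and rate lam, for x >= 0."""
    return lam ** alpha / math.gamma(alpha) * x ** (alpha - 1) * math.exp(-lam * x)


alpha, lam = 3.0, 2.0
# Midpoint rule on [0, 40]; the density beyond 40 is negligible for these parameters.
a, b, n = 0.0, 40.0, 200_000
h = (b - a) / n
mass = mean = second = 0.0
for i in range(n):
    x = a + (i + 0.5) * h
    w = gamma_pdf(x, alpha, lam) * h
    mass += w
    mean += x * w
    second += x * x * w
print(round(mass, 6), round(mean, 6), round(second - mean ** 2, 6))  # 1.0 1.5 0.75
```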

Beta Distribution

Let \(X\) be a random variable that takes values in \([0, 1]\) with probability density function given by

\[\begin{align*} f(x) & = \begin{cases} \frac{x^{\alpha-1} (1-x)^{\beta-1}}{B(\alpha, \beta)} & \textsf{if } x \in [0, 1] \\ 0 & \textsf{otherwise} \end{cases} \end{align*}\]

where \(\alpha, \beta > 0\) and \(B(\alpha, \beta) = \int_0^1 x^{\alpha-1} (1-x)^{\beta-1} \, \dd{x}\) is the beta function. Then \(X\) is said to have a beta distribution with parameters \(\alpha\) and \(\beta\). We write \(X \sim \mathsf{Beta}(\alpha, \beta)\).

Its expectation and variance are given by \[ \EE{X} = \frac{\alpha}{\alpha + \beta} \quad \textsf{and} \quad \Var{X} = \frac{\alpha \beta}{(\alpha + \beta)^2 (\alpha + \beta + 1)} \]
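This final sketch checks the beta formulas numerically. It does not evaluate the defining integral for \(B(\alpha, \beta)\). Instead it uses the standard identity \(B(\alpha, \beta) = \Gamma(\alpha)\Gamma(\beta)/\Gamma(\alpha + \beta)\) and then applies a midpoint rule on \([0, 1]\). The parameters \(\alpha = 2\) and \(\beta = 3\) are illustrative, and for them the formulas give \(\EE{X} = 0.4\) and \(\Var{X} = 0.04\). The names `beta_fn` and `beta_moments` are hypothetical.

```python
import math


def beta_fn(alpha, beta):
    """B(alpha, beta) via the identity Gamma(alpha) Gamma(beta) / Gamma(alpha + beta)."""
    return math.gamma(alpha) * math.gamma(beta) / math.gamma(alpha + beta)


def beta_moments(alpha, beta, n=100_000):
    """Midpoint-rule approximation of total mass, E[X] and Var(X) for Beta(alpha, beta)."""
    norm = beta_fn(alpha, beta)
    h = 1.0 / n
    mass = mean = second = 0.0
    for i in range(n):
        x = (i + 0.5) * h  # midpoint of the i-th subinterval of [0, 1]
        w = x ** (alpha - 1) * (1 - x) ** (beta - 1) / norm * h
        mass += w
        mean += x * w
        second += x * x * w
    return mass, mean, second - mean ** 2


alpha, beta = 2.0, 3.0
mass, mean, var = beta_moments(alpha, beta)
exact_mean = alpha / (alpha + beta)
exact_var = alpha * beta / ((alpha + beta) ** 2 * (alpha + beta + 1))
print(round(mass, 6), round(mean, 6), round(var, 6))  # 1.0 0.4 0.04
```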