
3.7 Method of least squares (Linear regression)

Exercises

Exercise 3.16 (From R for Data Science, Section 23) Consider the simulated data set sim1 in the modelr library. Do the following without using tibble.

Using ggplot(), provide a scatter plot of sim1$y versus sim1$x. Assume that \(m \sim U(-5, 5)\) and \(c \sim U(-20, 40)\). Generate \(100\) lines with slopes \(m\) and intercepts \(c\), and plot all of them layered on top of the scatter plot.
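A minimal sketch of this first step, assuming the ggplot2 and modelr packages are installed. The seed and the object names rand_lines and p are illustrative choices, not part of the exercise. The random lines are kept in a plain data.frame rather than a tibble, and geom_abline() is given the slope and intercept as aesthetics so that all 100 lines are drawn in one layer.

```r
# Sketch only: assumes ggplot2 and modelr are installed.
library(ggplot2)
library(modelr)   # provides the simulated data set sim1

set.seed(1)       # illustrative seed, only for reproducibility
n_lines <- 100

# Slopes m ~ U(-5, 5) and intercepts c ~ U(-20, 40) in a plain data.frame.
rand_lines <- data.frame(
  m = runif(n_lines, min = -5, max = 5),
  c = runif(n_lines, min = -20, max = 40)
)

# Scatter plot of y against x with every random line layered underneath
# the points (geom_abline does not inherit the x/y aesthetics).
p <- ggplot(as.data.frame(sim1), aes(x = x, y = y)) +
  geom_abline(data = rand_lines,
              aes(slope = m, intercept = c),
              alpha = 0.25) +
  geom_point()
print(p)
```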

Using the function below, compute the residual sum of squares (rss) for each of the lines.
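As an illustration only (this is not the exercise's own function), a hypothetical helper rss_line() can return \(\sum_{i} (y_i - m x_i - c)^2\) for a single line, and mapply() can then evaluate it once per random line, pairing the i-th slope with the i-th intercept. The sketch assumes modelr is installed for sim1.

```r
library(modelr)   # for sim1

# Hypothetical helper: residual sum of squares of the line y = m * x + c.
rss_line <- function(m, c, data) {
  fitted <- m * data$x + c
  sum((data$y - fitted)^2)
}

set.seed(1)
rand_lines <- data.frame(
  m = runif(100, min = -5, max = 5),
  c = runif(100, min = -20, max = 40)
)

# One rss value per random line; MoreArgs passes the same data each time.
rand_lines$rss <- mapply(rss_line, rand_lines$m, rand_lines$c,
                         MoreArgs = list(data = as.data.frame(sim1)))
head(rand_lines)
```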

Using

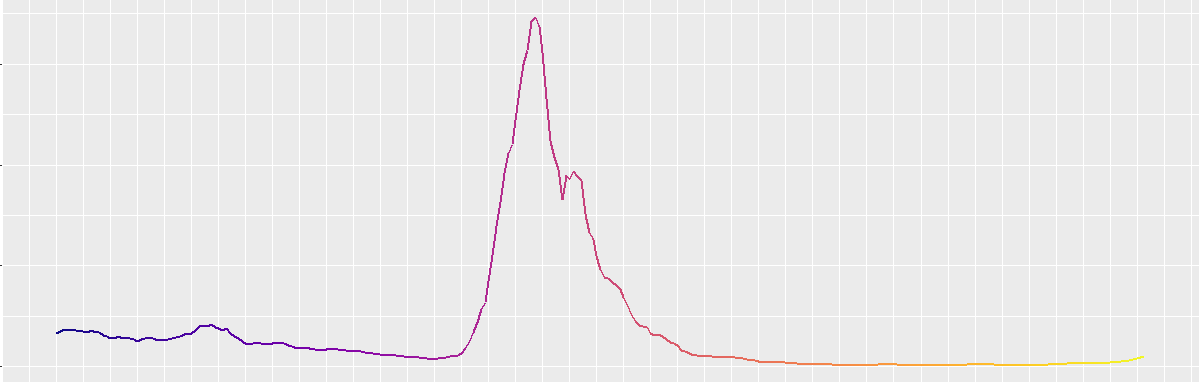

ggplot(), the inbuilt functionrank()andfilter()plot the 10 best lines (i.e.,10 lowestrss) along with the data points. Colour the BRL := best random line inviridis plasma red.Understand

optim()function and the commandDescribe the output of the code decide what

ls_fit$parprovide and call this BOL := best optim line.Use the inbuilt

lm()function to compute the slope and intercept of least square line and the line LSL := least square line.For LSL, BOL, BRL compute the residuals using the function given below

and provide three plots of the same as a histogram and scatter plot.
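One possible way to carry out these steps, assuming ggplot2, dplyr and modelr are installed. The helper rss_line() and the objects rand_lines, best10, brl, ls_fit, bol, lsl and resid_df are hypothetical names, and the colouring (plasma palette for the 10 best lines, red for the BRL) is only one reading of the instruction. Here optim() runs its default Nelder-Mead method from \((0, 0)\) on par = c(m, c); note that coef() of an lm() fit lists the intercept first and the slope second.

```r
# Sketch only: assumes ggplot2, dplyr and modelr are installed.
library(ggplot2)
library(dplyr)    # filter()
library(modelr)   # sim1

dat <- as.data.frame(sim1)  # plain data.frame instead of a tibble

# Hypothetical helper: residual sum of squares of y = m * x + c.
rss_line <- function(m, c, data) sum((data$y - (m * data$x + c))^2)

# 100 random lines and their rss.
set.seed(1)
rand_lines <- data.frame(m = runif(100, -5, 5), c = runif(100, -20, 40))
rand_lines$rss <- mapply(rss_line, rand_lines$m, rand_lines$c,
                         MoreArgs = list(data = dat))

# rank() gives 1 to the smallest rss, so these are the 10 best lines.
best10 <- filter(rand_lines, rank(rss) <= 10)
brl <- filter(rand_lines, rank(rss) == 1)  # BRL: best random line

p_best <- ggplot(dat, aes(x, y)) +
  geom_abline(data = best10, aes(slope = m, intercept = c, colour = rss)) +
  geom_abline(data = brl, aes(slope = m, intercept = c),
              colour = "red", linewidth = 1) +
  geom_point() +
  scale_colour_viridis_c(option = "plasma")
print(p_best)

# BOL: minimise rss over par = c(m, c); extra argument data goes to fn.
ls_fit <- optim(par = c(0, 0),
                fn = function(par, data) rss_line(par[1], par[2], data),
                data = dat)
bol <- ls_fit$par  # c(m, c)

# LSL: coefficients come back as (Intercept), x.
lsl_fit <- lm(y ~ x, data = dat)
lsl <- coef(lsl_fit)

# Residuals y - (m x + c) for the three lines, stacked in long format.
resid_df <- rbind(
  data.frame(line = "BRL", x = dat$x, resid = dat$y - (brl$m * dat$x + brl$c)),
  data.frame(line = "BOL", x = dat$x, resid = dat$y - (bol[1] * dat$x + bol[2])),
  data.frame(line = "LSL", x = dat$x, resid = unname(residuals(lsl_fit)))
)

# One panel per line: histograms, then residuals against x.
p_hist <- ggplot(resid_df, aes(resid)) +
  geom_histogram(bins = 10) +
  facet_wrap(~ line)
p_scatter <- ggplot(resid_df, aes(x, resid)) +
  geom_hline(yintercept = 0, linetype = "dashed") +
  geom_point() +
  facet_wrap(~ line)
print(p_hist)
print(p_scatter)
```

Because the least squares line minimizes the residual sum of squares over all lines, its rss can be no larger than that of the BRL, and the BOL should agree with it up to the convergence tolerance of optim().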

Exercise 3.17 (Boscovich's best fit line) Write R code that uses the optim() or optimize() function to solve Boscovich's formulation of finding the best line. That is, for data points \(\{(x_i, y_i)\}_{i=1}^{n}\), find \(m\) and \(c\) that minimize

\[\begin{align}
\sum_{k=1}^{n} \left| y_k - m x_k - c \right| \tag{3.17}
\end{align}\]
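A sketch for this exercise, using sim1 only as example data and assuming modelr is installed. Since optimize() minimizes over a single variable, the two unknowns \(m\) and \(c\) are handled here with optim(), whose default Nelder-Mead method needs no derivatives; this matters because the absolute value in (3.17) is not differentiable where a residual is zero. The helper name sad_line() is hypothetical, and starting from the lm() estimate is a design choice rather than a requirement.

```r
library(modelr)   # sim1, used only as example data

# Hypothetical helper: sum of absolute deviations in (3.17), par = c(m, c).
sad_line <- function(par, data) sum(abs(data$y - par[1] * data$x - par[2]))

dat <- as.data.frame(sim1)

# Start at the least squares fit, reordered from (Intercept, x) to (m, c).
start <- rev(unname(coef(lm(y ~ x, data = dat))))

lad_fit <- optim(par = start, fn = sad_line, data = dat)
lad_fit$par    # (m, c) found by Nelder-Mead
lad_fit$value  # the corresponding sum of absolute deviations
```

Nelder-Mead can stop slightly short of the minimum on a non-smooth objective like this one. As a cross-check, rq(y ~ x, tau = 0.5) from the quantreg package fits the same least absolute deviations line.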