\[ \newcommand{\nRV}[2]{{#1}_1, {#1}_2, \ldots, {#1}_{#2}} \newcommand{\pnRV}[3]{{#1}_1^{#3}, {#1}_2^{#3}, \ldots, {#1}_{#2}^{#3}} \newcommand{\onRV}[2]{{#1}_{(1)} \le {#1}_{(2)} \le \ldots \le {#1}_{(#2)}} \newcommand{\RR}{\mathbb{R}} \newcommand{\Prob}[1]{\mathbb{P}\left({#1}\right)} \newcommand{\PP}{\mathcal{P}} \newcommand{\iidd}{\overset{\mathsf{iid}}{\sim}} \newcommand{\X}{\times} \newcommand{\EE}[1]{\mathbb{E}\left[{#1}\right]} \newcommand{\Var}[1]{\mathsf{Var}\left({#1}\right)} \newcommand{\Ber}[1]{\mathsf{Ber}\left({#1}\right)} \newcommand{\Geom}[1]{\mathsf{Geom}\left({#1}\right)} \newcommand{\Bin}[1]{\mathsf{Bin}\left({#1}\right)} \newcommand{\Poi}[1]{\mathsf{Pois}\left({#1}\right)} \newcommand{\Exp}[1]{\mathsf{Exp}\left({#1}\right)} \newcommand{\SD}[1]{\mathsf{SD}\left({#1}\right)} \newcommand{\sgn}[1]{\mathsf{sgn}} \newcommand{\dd}[1]{\operatorname{d}\!{#1}} \]

2.2 Central Limit Theorem

Theorem 2.1 Let \(X_1, X_2, \dots, X_n\) be i.i.d. random variables with mean \(\mu\) and finite variance \(\sigma^2 > 0\). Let \(S_n = \sum_{i=1}^{n} X_i\). Then, for any \(x \in \RR\), \[\begin{equation} \lim_{n \to \infty} \Prob{ \frac{S_n - n\mu}{\sigma\sqrt{n}} \leq x } = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}}\exp{\left( -\frac{t^2}{2} \right)}\dd{t} \tag{2.1} \end{equation}\] In other words, \[\begin{equation} \frac{S_n - n\mu}{\sigma\sqrt{n}} \overset{d}{\longrightarrow} N(0,1) \tag{2.2} \end{equation}\]

The theorem says that the distribution of the standardized sum \(\frac{S_n - n\mu}{\sigma\sqrt{n}}\) converges to the standard normal distribution as \(n \to \infty\), irrespective of the original distribution of the \(X_i\)’s. Since a linear transformation of a normal random variable is again normal, we can conclude that for large \(n\), the distribution of \(S_n\) is approximately normal with mean \(n\mu\) and variance \(n\sigma^2\). Indeed, a different way to write the conclusion of the theorem is \[\begin{equation} \tag{2.3} \lim_{n \to \infty} \Prob{S_n \leq n\mu + x\sigma\sqrt{n}} = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}}\exp{\left( -\frac{t^2}{2} \right)}\dd{t} \end{equation}\] and the right-hand side equals \[\begin{equation} \tag{2.4} \Prob{Z_n \leq n\mu + x\sigma\sqrt{n}} \end{equation}\] where \(Z_n \sim N(n\mu, n\sigma^2)\). Since a \(\Bin{n, p}\) random variable is a sum of \(n\) i.i.d. \(\Ber{p}\) random variables, the approximation in Equation (2.3) is often used in practice to approximate binomial probabilities. For example, if \(X \sim \Bin{100, 0.5}\), then \(n\mu = 50\) and \(\sigma\sqrt{n} = 5\), and we can approximate \(\Prob{X \leq 40} \approx \Phi\left(\frac{40.5 - 50}{5}\right) = \Phi(-1.9) = 0.0287\), where \(\Phi\) denotes the standard normal distribution function and \(40.5\) is used in place of \(40\) as a continuity correction for the integer-valued \(X\). The exact value is \(\Prob{X \leq 40} = 0.0284\). For \(p = 0.5\) the approximation is already quite good for \(n\) as small as \(10\); when \(p\) is close to \(0\) or \(1\), a larger \(n\) is needed.
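The numbers in the binomial example can be checked in R. The following is a minimal sketch using the base functions `pbinom()` and `pnorm()`; the variable names are illustrative and not part of the text.

```r
# Exact probability P(X <= 40) for X ~ Bin(100, 0.5)
n <- 100
p <- 0.5
exact <- pbinom(40, size = n, prob = p)

# Normal approximation with continuity correction (40.5 in place of 40)
mu <- n * p
sigma <- sqrt(n * p * (1 - p))
approx_cc <- pnorm((40.5 - mu) / sigma)

# Normal approximation without the continuity correction, for comparison
approx_plain <- pnorm((40 - mu) / sigma)

print(round(c(exact = exact, approx_cc = approx_cc, approx_plain = approx_plain), 4))
```

The first two entries should agree with the values 0.0284 and 0.0287 quoted in the example; the third shows how much the approximation degrades when the continuity correction is dropped.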

Exercises

Exercise 2.1 (Simulating CLT) De Moivre’s Central Limit Theorem states the following:

Theorem 2.1 For \(X_{n} \sim \Bin{n,p}\) with fixed \(p \in (0,1)\) and any \(x \in \RR\), \[\begin{equation} \tag{2.5} \lim_{n \to \infty} \left| \Prob{ \frac{X_n-np}{\sqrt{np(1-p)}} \leq x } - \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}}\exp{\left( -\frac{t^2}{2} \right)}\dd{t} \right| = 0 \end{equation}\]

- Using the `rbinom()` function, generate 100 samples of \(\Bin{20, 0.5}\) and plot the histogram of the dataset.
- Using the `rnorm()` function, generate 100 samples of \(N(10, 5)\) and plot the histogram of the dataset. Here \(5\) is the variance, matching \(np(1-p)\) for \(\Bin{20, 0.5}\), while `rnorm()` expects the standard deviation.
- Provide a plot that can be thought of as a computer proof of the Central Limit Theorem.
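One possible starting point for this exercise is sketched below. It assumes that the second parameter of \(N(10, 5)\) is the variance, so `rnorm()` is called with `sd = sqrt(5)`; the seed, the variable names, and the choice to overlay the normal density on both histograms are illustrative assumptions, not part of the exercise statement.

```r
set.seed(1)  # arbitrary seed, used only for reproducibility

n_samples <- 100
binom_sample <- rbinom(n_samples, size = 20, prob = 0.5)
# rnorm() takes the standard deviation, so variance 5 means sd = sqrt(5)
norm_sample <- rnorm(n_samples, mean = 10, sd = sqrt(5))

# Two histograms side by side, each with the N(10, 5) density overlaid
op <- par(mfrow = c(1, 2))
hist(binom_sample, breaks = seq(-0.5, 20.5, by = 1), freq = FALSE,
     main = "Bin(20, 0.5)", xlab = "value")
curve(dnorm(x, mean = 10, sd = sqrt(5)), add = TRUE)
hist(norm_sample, freq = FALSE, main = "N(10, 5)", xlab = "value")
curve(dnorm(x, mean = 10, sd = sqrt(5)), add = TRUE)
par(op)
```

Increasing `n_samples` and the binomial `size` is one way to make the agreement between the two histograms more convincing.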

Exercise 2.2 (Poisson Approximation) Do the following:

- Using `rbinom()`, generate 100 samples of \(\Bin{2000, 0.001}\), save them in a data frame `df_binomial`, and plot the histogram of the dataset.
- Using `rpois()`, generate 100 samples of \(\Poi{2}\), save them in a data frame `df_poi`, and plot the histogram of the dataset.

Think of ways you can enhance the above exercise to come up with a computer proof of the Poisson approximation from your probability theory class.
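As a hedged sketch of one such enhancement, the code below builds the two data frames and then compares the exact probability mass functions of \(\Bin{2000, 0.001}\) and \(\Poi{2}\) using `dbinom()` and `dpois()`. The column name `value`, the range `0:15`, and the summary `max_pmf_gap` are assumptions made for illustration.

```r
set.seed(1)  # arbitrary seed, used only for reproducibility

# Samples requested by the exercise, stored in data frames
df_binomial <- data.frame(value = rbinom(100, size = 2000, prob = 0.001))
df_poi <- data.frame(value = rpois(100, lambda = 2))

# Compare the two exact probability mass functions on k = 0, ..., 15
k <- 0:15
pmf_binomial <- dbinom(k, size = 2000, prob = 0.001)
pmf_poisson <- dpois(k, lambda = 2)
max_pmf_gap <- max(abs(pmf_binomial - pmf_poisson))
print(max_pmf_gap)

# Side-by-side bars of the two mass functions
barplot(rbind(pmf_binomial, pmf_poisson), beside = TRUE, names.arg = k,
        legend.text = c("Bin(2000, 0.001)", "Pois(2)"),
        xlab = "k", ylab = "probability")
```

Repeating the comparison for growing \(n\) with \(np = 2\) held fixed would show the gap shrinking, which is the content of the Poisson approximation.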

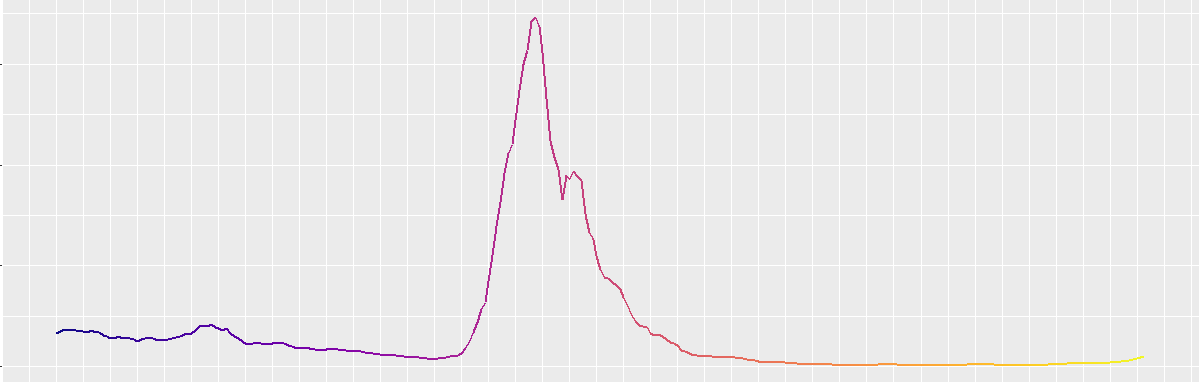

Exercise 2.3 (Berry-Esseen Type bound) The following result is a Berry-Esseen type bound:

Theorem 2.2 Let \(X_{n} \sim \Bin{n,p}\). Then \(\exists\) \(C > 0\) such that \[\begin{equation} \tag{2.6} \sup_{x \in \RR} \left| \Prob{ \frac{X_n-np}{\sqrt{np(1-p)}} \leq x } - \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}}\exp{\left( -\frac{t^2}{2} \right)}\dd{t} \right| \leq C\frac{p^2 + (1-p)^2}{2\sqrt{np(1-p)}} \end{equation}\]

We shall check it by simulation, using the algorithm below.

    For x = -2, -1.9, -1.8, ..., 0, ..., 1.9, 2
        using inbuilt pnorm() function find z[x] := pnorm(x)
    Set p
    For m = 1, 50, 100, 150, ..., 1000
        For x = -2, -1.9, -1.8, ..., 0, ..., 1.9, 2
            1) Generate bin_sample: 1000 samples of Bin(m, p) using inbuilt rbinom() function
               and compute std_bin_sample := (bin_sample - m * p) / ((m * p * (1 - p))^(0.5))
            2) Compute y[x]: the proportion of samples in std_bin_sample less than or equal to x
            3) Repeat steps 1) and 2) 100 times and compute the average mean_y[x] over the trials
        4) Calculate diff[m] = max over x of abs(mean_y[x] - z[x])
    For m = 1, 50, 100, 150, ..., 1000
        Calculate error[m] := (p^2 + (1 - p)^2) / (2 * (m * p * (1 - p))^0.5)
    Plot diff and error.
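The algorithm can be sketched in R roughly as follows. This is one possible reading, not a definitive implementation: the value `p <- 0.5` is an assumption (the algorithm leaves `p` free), each trial draws one sample and evaluates it at every `x` rather than redrawing per `x`, and the names `diff_m` and `error_m` stand in for `diff` and `error` to avoid shadowing the base function `diff()`.

```r
set.seed(1)  # arbitrary seed, used only for reproducibility

p <- 0.5                                  # assumed value; the exercise leaves p free
x_grid <- seq(-2, 2, by = 0.1)
z <- pnorm(x_grid)                        # standard normal CDF on the grid
m_values <- c(1, seq(50, 1000, by = 50))
n_samples <- 1000
n_trials <- 100
tol <- 1e-9                               # guards "<=" against floating-point ties

diff_m <- numeric(length(m_values))
for (j in seq_along(m_values)) {
  m <- m_values[j]
  # One column per trial: empirical CDF of the standardized sample on x_grid
  y <- replicate(n_trials, {
    bin_sample <- rbinom(n_samples, size = m, prob = p)
    std_bin_sample <- (bin_sample - m * p) / sqrt(m * p * (1 - p))
    sapply(x_grid, function(x) mean(std_bin_sample <= x + tol))
  })
  mean_y <- rowMeans(y)                   # average over the trials, for each x
  diff_m[j] <- max(abs(mean_y - z))
}

# Right-hand side of the bound with C = 1
error_m <- (p^2 + (1 - p)^2) / (2 * sqrt(m_values * p * (1 - p)))

plot(m_values, error_m, type = "l", lty = 2, ylim = range(0, diff_m, error_m),
     xlab = "m", ylab = "value")
lines(m_values, diff_m, type = "b")
legend("topright", legend = c("diff", "error"), lty = c(1, 2))
```

Because the maximum is taken over a finite grid of `x` values and the probabilities are estimated from samples, the plotted `diff_m` only approximates the supremum in the theorem; comparing the two curves suggests what constant \(C\) is plausible for the chosen `p`.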